|

|

RepeaterBots |

The old RepeaterBot site, which describes a Q-Learning approach, is now here.

The Goal

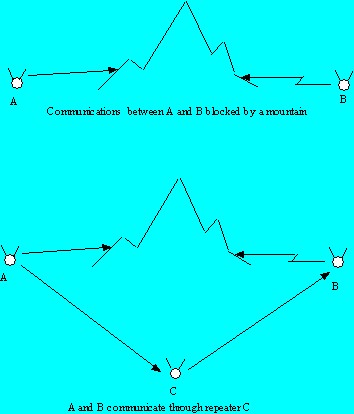

The goal of the RepeaterBots project is to construct controllers for simulated robots that carry VHF or UHF radio repeaters into unexplored terrain. The robots' mission is to explore a new area and provide full radio coverage over it via the repeaters they carry.VHF and UHF radios are limited to a large degree to line-of-site communications. To provide communications in a hilly region, radio repeaters can be used to pick up a signal from some source A and retransmit it to a receiver B that is located in some area blocked from receiving signals directly from A. Repeaters are typically placed on the top of a mountain or tower; however, a repeater can be placed near the end of a ridge to provide communications to and from radios on both sides of the ridge as illustrated below in Figure 1.

Using autonomous robots to place repeaters would be useful in a number of applications. For example, robots exploring the landscape of a distant planet could share information and strategy over their radio links. In hilly terrain, any particular robot might not be able to hear all the other robots due to signal blockage. However, intelligent robots could position themselves to facilitate exploring while still maintaining a wide coverage, repeater-based network.

Previous Work

I originally used a Q-Learning approach to attempt to construct simulated

robots

that would explore a landscape and position themselves to provide full

radio coverage of an area. That approach, described

here, did not provide an adequate solution

to the problem. It was not possible, in general, to construct a

table of Q-values that would lead the robots to solve all the simple

test terrains because of the ambiguity in their state and sensory information

and randomness between trials.

A better solution was needed that would allow robot cooperation and

flexibility.

Self-Organizing Particle System Approach

Rodriguez and Reggia¹ refer to systems of autonomous agents whose

actions are governed by the local environment and other local agents as

"self-organizing particle systems." Examples of such systems include

systems that mimic those actions performed by birds flocking and ants

gathering food. These authors extend the idea of self-organizing particle

systems, where movement through space as a "superorganism" is the goal,

to a more general problem-solving methodology.

This version of the RepeaterBot system is based on a simple self-organizing particle system. One of the primary features of the system is the way agents explore the environment. Instead of flocking behavior, where agents move together, the RepeaterBot agents tend to move away from the center of their local group of agents. This behavior might be called "reverse flocking." Web² uses the term "inverse flocking".

In this system, locality is not based on the physical distance between agents. Because groups of agents can communicate with other agents they can contact via their radio and because agents can pass messages through other agents to reach agents they cannot directly hear, locality for an agent is defined by the group of agents it can hear, either directly or indirectly, or some subset thereof. A group of agents that can communicate with one another is called a cluster.

When making move decisions, the agents in a cluster first communicate their positions and then compute the "center³" of the polygon formed by connecting them. They tend to move away from the center of the cluster, causing the agents to explore for agents they cannot hear by "fanning out". This behavior, combined with other simple control motivations, allows the agents to solve simple repeater coverage problems.

System Details

Figure 2 shows a schematic of the simple 2-level control approach used

by the agents. The controller modules fill in an 8-element array where

each element represents one of the eight grid squares surrounding the

agent. At the lower level, the controller fills in the array to

reflect barriers and squares that the robot has visited before. (The

location history is used to implement a simple analogy to an organism

that leaves scent to mark its trail.)

Figure 2 - Two-level control approach

The higher-level controller modifies the array to reflect behavior based on two parameters. First, if the agent is in a cluster, the higher-level controller indicates a preference to move away from the center of the cluster. Second, it gives a preference for "inertia" where an agent moving in a certain direction tends to continue in that direction.

The array values are small integers representing the goodness of taking a particular move. The agent selects the direction to move based on the highest goodness value in the array. If the highest value occurs in more than one array cell, the cell and its corresponding direction are selected randomly from the cells with the highest goodness value.

Preliminary Results

For initial testing of the approach, terrain mazes similar to those

used in the Q-learning version of the system were used; however,

the grid size was changed from 30 x 30 to 50 x 50. The

old grids were extended by lengthening

the barriers and modified to increase their difficulty.

The new RepeaterBot system typically solves all 5 mazes in under 500 iterations. Maze 1 is the most difficult to solve. This version of the system scales much better than the Q-learning approach. By adding an extra mobile agent, solutions are found for all the mazes in less that 200 iterations.

The simulator supports two types of clusters. In the first, locality for an agent is defined by all the agents it can communicate with, either directly or indirectly by using repeater techniques through other agents. In the second type of cluster, the locality for an agent is defined by all the other agents it can hear through its radio directly. Experiments are in progress to compare these two methods.

The simulator also supports a stochastic method to temporarily inhibit certain high-level control preferences when an agent senses it is repeating a cycle of moves. Initial results tend to show that this technique is useful in reducing the number of iterations required by the simulator to solve the repeater placement problem.

Future Work

This work is preliminary and will provide the basis for continuing research.

The set of test mazes will be extended and tests will be performed comparing

the average problem solution time for different RepeaterBot configurations

against the minimum number of moves to solve the problem.

The cluster technique for exploring needs to be refined so that agents can solve more complex mazes. I plan to extend the simple agent controller to include additional decision techniques and add a machine learning component so that the agents can be trained to operate efficiently in a particular type of environment by learning to use the most appropriate decision elements from its set of control techniques.

In addition, other experiments are planned, including analysis of scalability and the effect of the number of agents on problem solution time.

Footnotes